I'm a Computer Science researcher and engineer from Stony Brook University working on reliable agentic systems and multimodal tool use. I build evaluation benchmarks and perception-to-action pipelines that stay robust under real-world noise, and I study cross-lingual text comprehension in low-resource languages through reproducible, open research.

Education

Experience

Research Intern — UC Berkeley

Summer 2025

Principal Investigator: Prof. Joseph E. Gonzalez

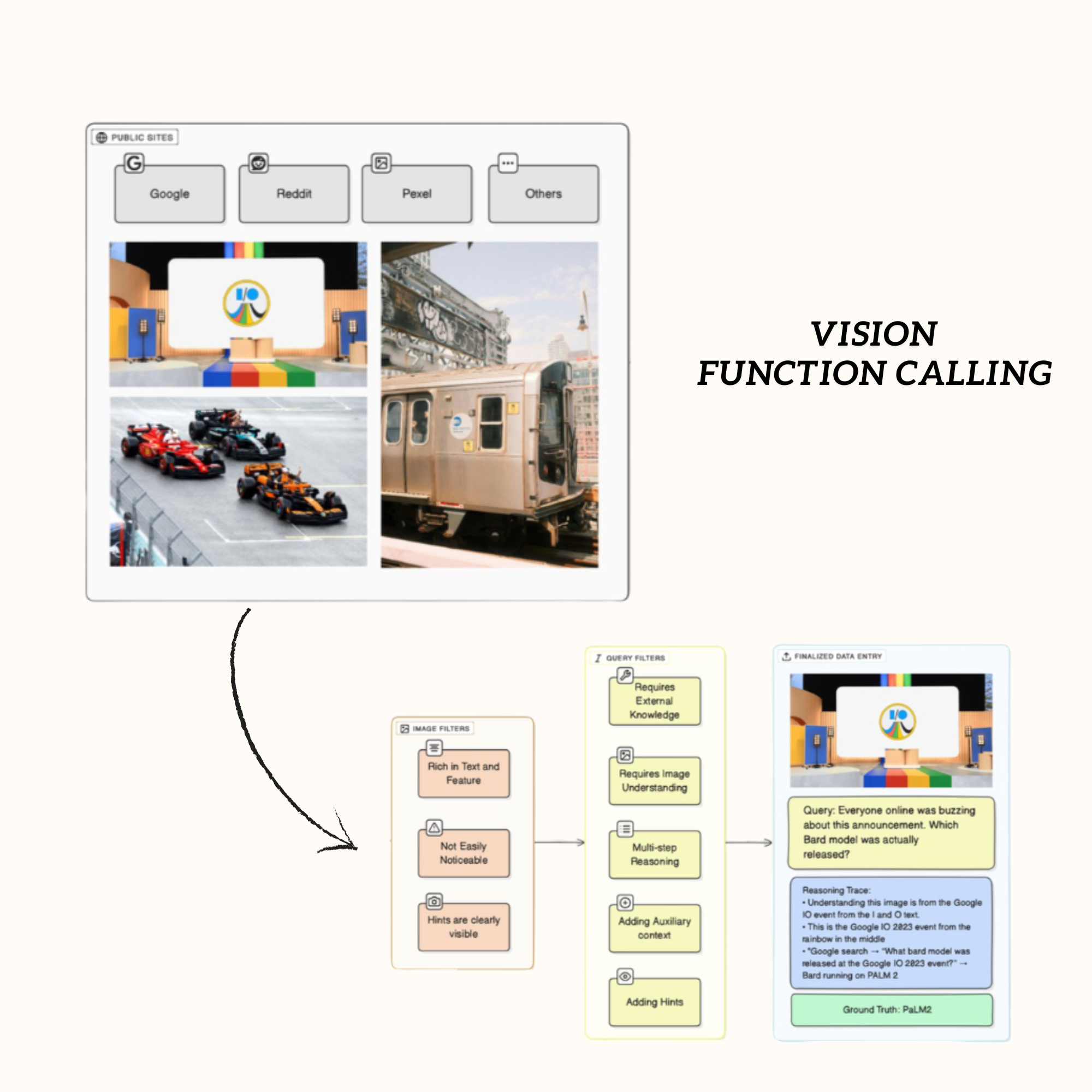

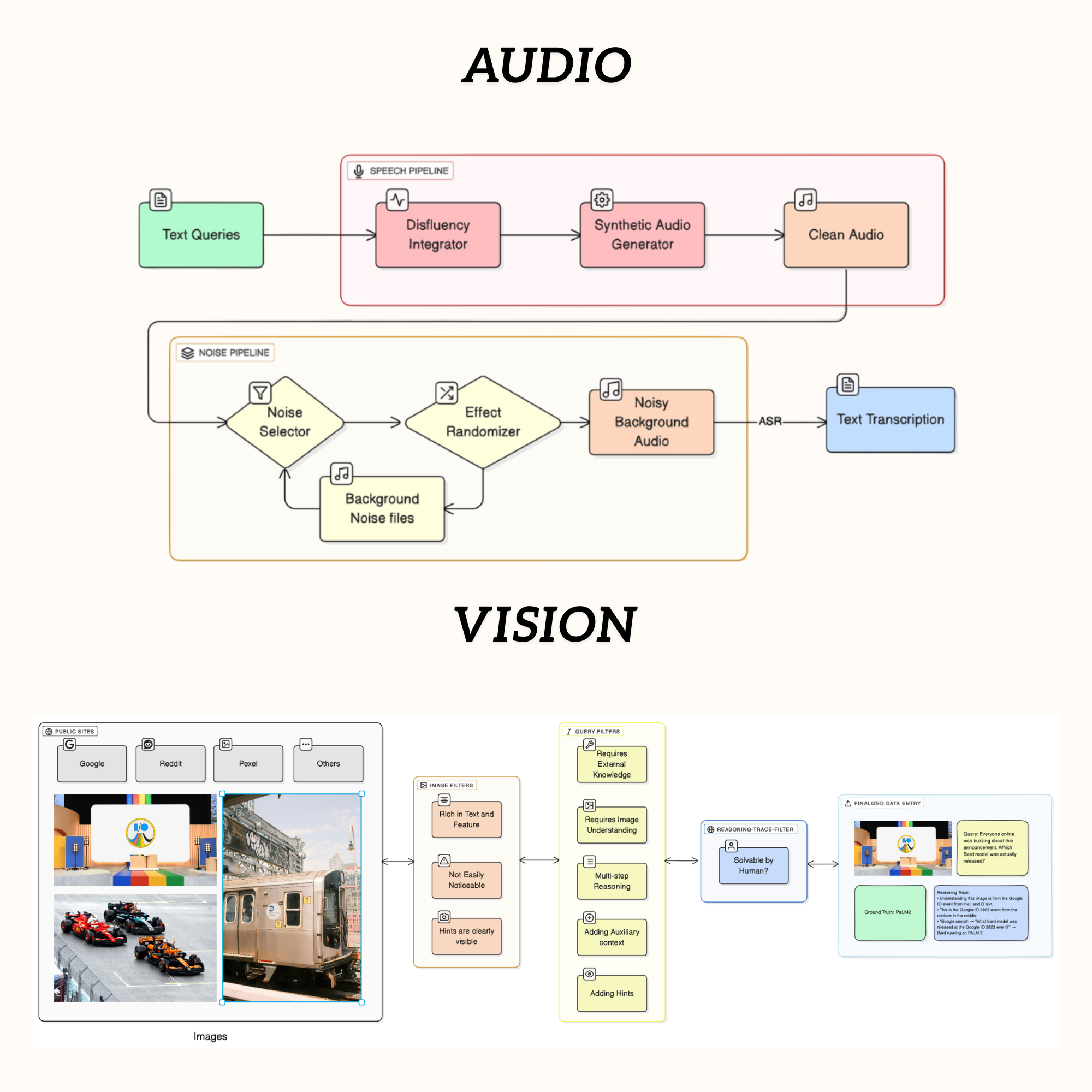

I extended the Berkeley Function Calling Leaderboard (BFCL) to multimodal evaluation by curating a 300-image dataset and wiring in audio and vision pipelines, enabling perception-to-action benchmarking beyond text. I also co-authored MFCL: A Multi-Modal Function Calling Evaluation for Large Language Models (under review at ICLR 2026), an 8.2K-task benchmark with controlled perturbations (noise, occlusion, disfluencies) that provides the first principled framework for diagnosing perception-to-tool-call failures in large language models.

Research Intern* — Carnegie Mellon University

Apr 2024 - Aug 2025

Principal Investigator: Prof. Jack Mostow

I improved RoboTutor, a Java-based Android app that teaches literacy and numeracy in small communities, by adding a Placement Mode that promotes students to more challenging levels once they ace several levels in a row. This created a smoother path for advanced learners, helping them progress faster and increasing user engagement by 25%.

Research Intern* — Stony Brook University

Jan 2025 - May 2025

Principal Investigator: Prof. Ritwik Banerjee

I co-authored the CLEF 2025 paper “SCIRE at CheckThat! 2025”, which proposes a unified framework for detecting and verifying scientific discourse on social media. I built a DeBERTa-v3-large multilabel classifier (F1 = 0.92) and a two-stage retrieval pipeline that pairs a Snowflake-Arctic dense retriever with a MiniLM cross-encoder (MRR@5 = 0.65), ranking in the top 5 on the CheckThat! Lab leaderboard.

Research Intern — Stanford University

Summer 2024

Principal Investigator: Prof. Nick Haber

I helped develop AirBlender, a Blender + LangChain + OmniGibson tool on NVIDIA Omniverse for natural-language 3D scene editing, schema automation, and physics-aware simulation. Core AirBlender modules were integrated into LayoutVLM with Stanford’s AI Lab (Prof. Nick Haber), supporting its interactive VLM pipeline with feedback queries, inpainting, and lighting control; LayoutVLM was accepted to CVPR 2025.

* denotes a part-time role

Publications

📝 Accepted at NeurIPS Workshop 2025 (NORA)

MFCL Vision: Benchmarking Tool Use in Multimodal Large Language Models for Visual Reasoning Tasks

Huanzhi Mao, Jad Bendarkawi*, Evan Turner*, Ritesh Chavan*

Presents the first benchmark that evaluates how well multimodal LLMs translate visual understanding into correct web tool calls under real-world visual noise and perturbations.

Under Review at ICLR 2026

MFCL: A Multi-Modal Function Calling Evaluation for Large Language Models

Huanzhi Mao, Aditya Ghai*, Jad Bendarkawi*, Imra Dawoodai*, Evan Turner*, Ritesh Chavan*, Zoir Imomaliev*, Antonio Ginart, Shishir G. Patil, John Emmons, Joseph E. Gonzalez

Proposes a large-scale benchmark that systematically measures how well LLMs convert text, audio, and images into accurate function calls, isolating perception, reasoning, and formatting failures.

Under Review at ICLR 2026

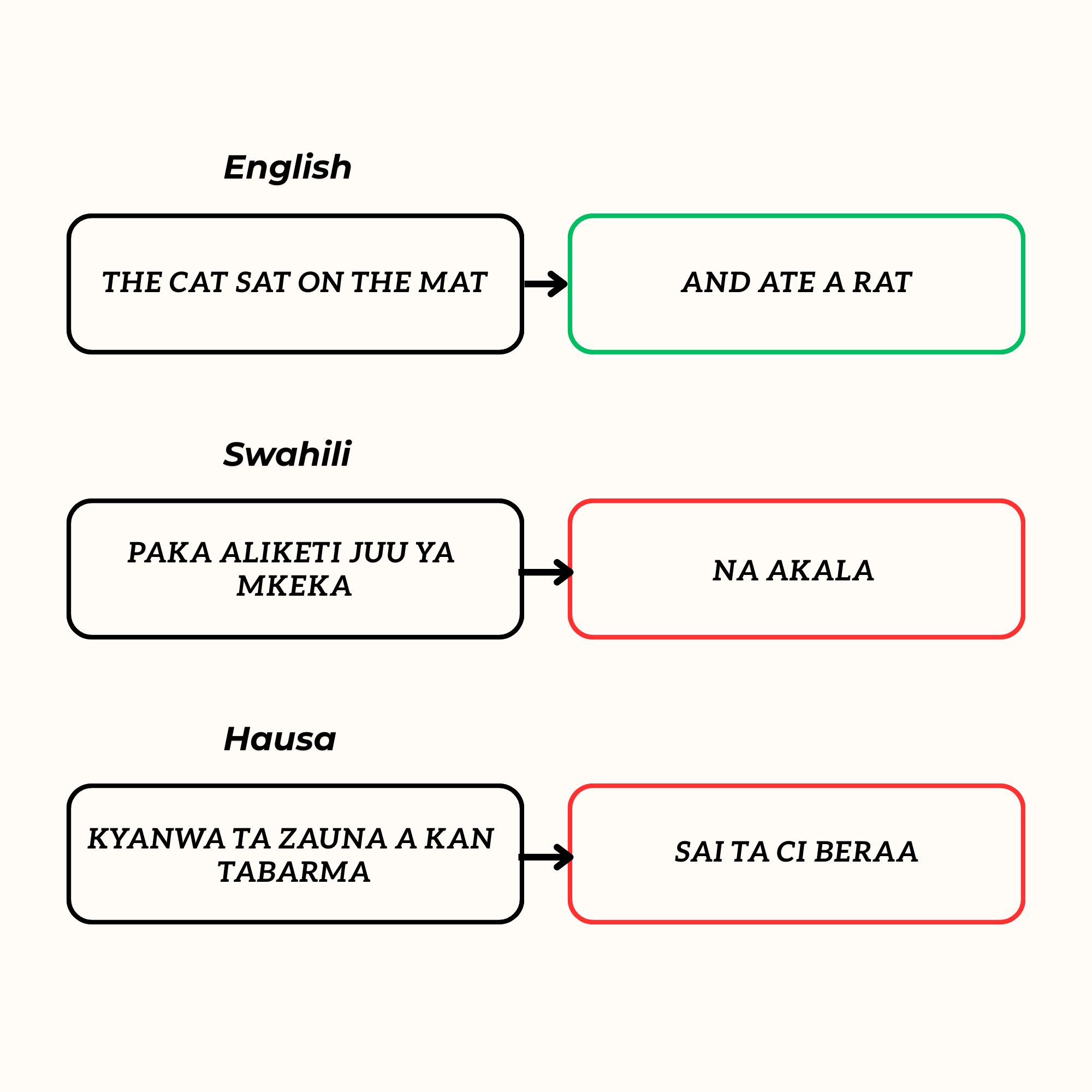

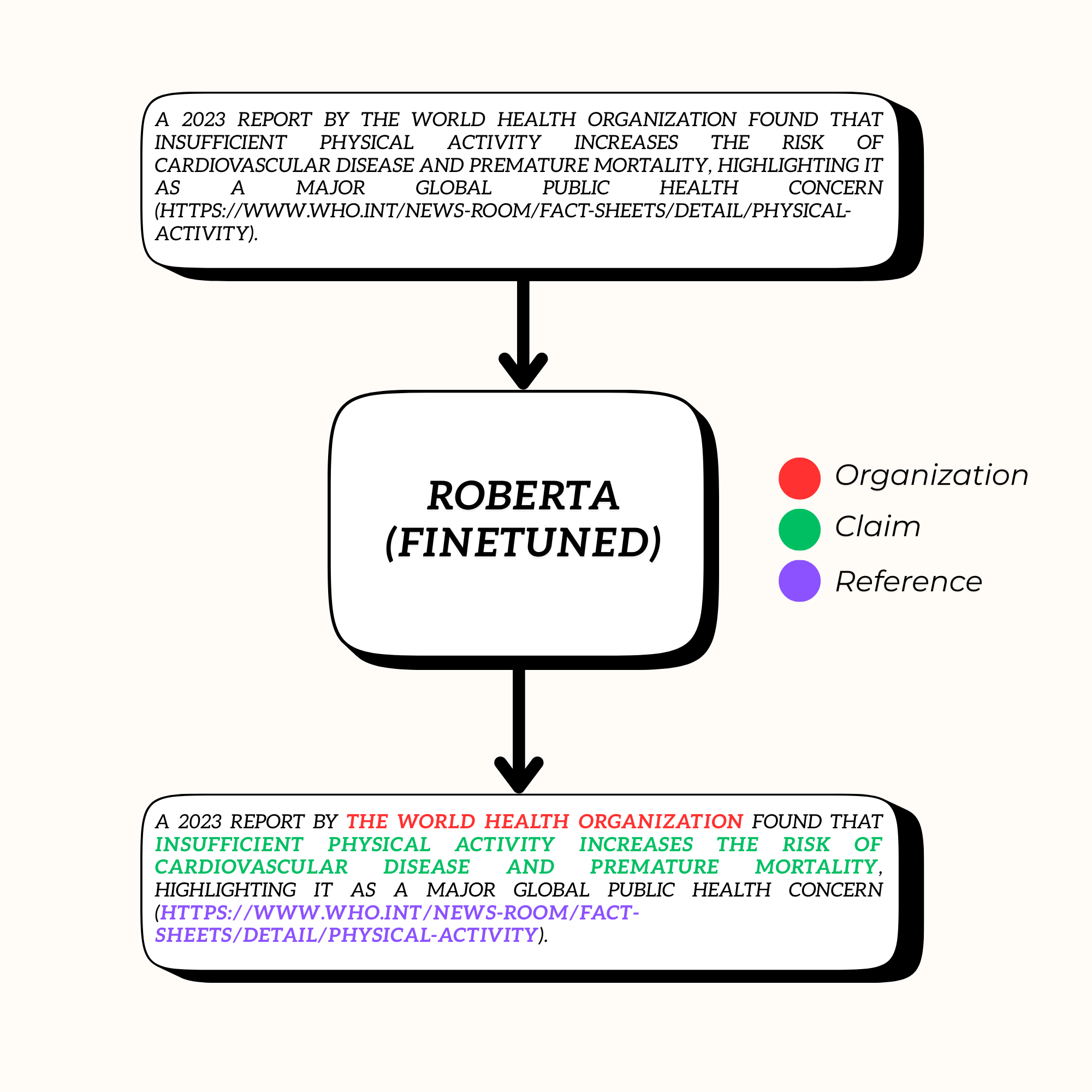

Testing cross-lingual text comprehension in LLMs using next sentence prediction

Ritesh Sunil Chavan, Jack Mostow

Shows that LLM comprehension drops sharply in low-resource languages and that Chain-of-Thought prompting helps weaker models but can hurt stronger ones in cross-lingual settings.

📝 Accepted as Working Notes at CLEF 2025

Bridging Social Media, Scientific Discourse, and Scientific Literature

Parth Manish Thapliyal, Ritesh Sunil Chavan*, Samridh Samridh*, Chaoyuan Zuo, Ritwik Banerjee

Introduces a unified system to detect scientific claims on social media and link implicit references to real research papers, enabling scalable verification of scientific discourse online.

* denotes equal contribution

Articles

Stony Brook Computing Society Wins at HackHarvard 2024 with Healthcare Innovation

The article reports that the Stony Brook Computing Society won the “Best Healthcare” award at HackHarvard 2024 for creating Flexy, an app that uses pose detection to help users recover from injuries through guided physical-therapy exercises.

Stony Brook Computing Society wins “Best Healthcare” award at HackHarvard

The article reports that Stony Brook Computing Society won the “Best Healthcare” award at HackHarvard 2024 for their physical-therapy app Flexy (And I Know It), which helps users perform rehabilitative exercises using pose-detection and guided feedback.

Bridging Dreams: Stony Brook Computing Society Spends a Day at Google

The article describes how 25 students from the Stony Brook Computing Society were selected from over 200 applicants to visit the Google office in New York City, tour the campus, meet alumni working there, and participate in panels and hands-on labs about cloud computing and GenAI.